Dataset import

Concepts

Dataset Import is the primary way to load data into the platform and to create new Datasets. Like any other conversion, the import process is tuned for processing large files (megabytes to gigabytes). To import data into the platform, the client must complete the following steps in order:

- Get the URL of the intermediate upload location
- Upload the input file to the intermediate location (preferably with an Azure Blob Storage SDK)
- Create a Transfer
- Check Transfer.Status and wait for the import to be Completed
- Check Transfer.ImportResults for the dataset resulting from the import

When a transfer is completed, a new Dataset will be registered in the metadata service and data will be loaded to the specified storage.
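The last two steps amount to a polling loop. Below is a minimal Python sketch, assuming the transfer is returned as a JSON object whose status and importResults fields correspond to Transfer.Status and Transfer.ImportResults. The fetch function get_transfer, the failure status names and the polling defaults are hypothetical; this document does not name the endpoint that returns a transfer.

```python
import time
from typing import Any, Callable, Mapping

# Hypothetical failure statuses: this document only names "Completed".
FAILED_STATUSES = {"Error", "Failed"}


def wait_for_import(
    get_transfer: Callable[[], Mapping[str, Any]],
    poll_interval_s: float = 5.0,
    timeout_s: float = 3600.0,
) -> Any:
    """Poll a transfer until it is Completed, then return its import results.

    get_transfer is a caller-supplied (hypothetical) function that fetches the
    current transfer as a dict, e.g. by an HTTP GET against the platform.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        transfer = get_transfer()
        status = transfer.get("status")  # assumed JSON name of Transfer.Status
        if status == "Completed":
            # Assumed JSON name of Transfer.ImportResults.
            return transfer.get("importResults")
        if status in FAILED_STATUSES:
            raise RuntimeError(f"import failed with status {status!r}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"import still {status!r} after {timeout_s} s")
        time.sleep(poll_interval_s)
```

Passing the fetch function in keeps the loop independent of any particular HTTP client or authentication scheme.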

Examples

1. Getting the URL of the intermediate upload location

GET /api/conversion/transfer/upload-url

Returns the Azure Blob Storage container URL for the upload, as a string.
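A minimal Python sketch of this request using only the standard library. The base URL is a placeholder assumption, and authentication is left out because this document does not describe it; the function names are hypothetical.

```python
import urllib.request


def upload_url_request(base_url: str) -> urllib.request.Request:
    """Build the step-1 request: GET /api/conversion/transfer/upload-url.

    base_url (your platform's API root) is an assumption; any authentication
    headers are deployment-specific and not covered here.
    """
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/conversion/transfer/upload-url",
        method="GET",
    )


def fetch_upload_url(base_url: str) -> str:
    """Send the request and return the response body as text (the upload URL)."""
    with urllib.request.urlopen(upload_url_request(base_url)) as resp:
        # If the service wraps the string in JSON quotes, decode with json.loads.
        return resp.read().decode("utf-8")
```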

2. Uploading the file to the intermediate location

Use the standard Azure Blob Storage NuGet package to upload the file to the container. A minimal .NET sample:

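using System;
using Microsoft.Azure.Storage.Blob; // legacy Azure Storage SDK (Microsoft.Azure.Storage.Blob NuGet package)
var blob = new CloudBlockBlob(new Uri(sasUrl)); // sasUrl: the URL returned in step 1
await blob.UploadFromFileAsync(localFilePath);  // uploads the whole local file to the blob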

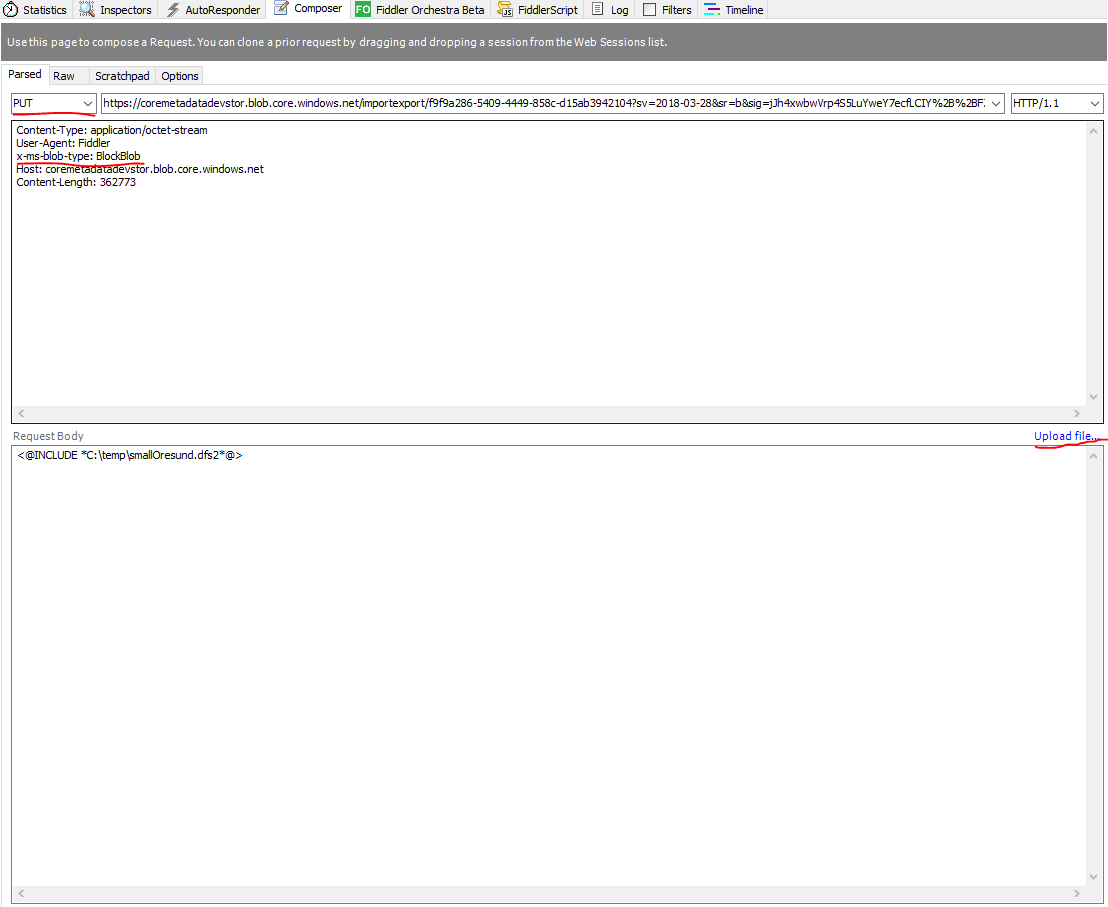

Similar code is available in the JavaScript Azure Blob Storage client library. The URL can also be used to upload bytes without any Azure library. The following request, composed in Telerik Fiddler, uploads a file without an Azure library:

PUT <sasUrl>
Content-Type: application/octet-stream
User-Agent: Fiddler
x-ms-blob-type: BlockBlob
Host: coremetadatadevstor.blob.core.windows.net
Content-Length: 362773

Request body (Fiddler's placeholder that inserts the contents of the local file):

<@INCLUDE *C:\temp\smallOresund.dfs2*@>
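The same raw upload can be scripted. This Python sketch, using only the standard library, reproduces the Fiddler request above: a PUT to the SAS URL with the x-ms-blob-type: BlockBlob header (the Azure Blob Storage Put Blob operation). The function names are hypothetical; urllib adds the Host and Content-Length headers itself.

```python
import urllib.request
from pathlib import Path


def blob_put_request(sas_url: str, data: bytes) -> urllib.request.Request:
    """Build a Put Blob request that uploads `data` as a single block blob."""
    return urllib.request.Request(
        sas_url,
        data=data,
        method="PUT",
        headers={
            "Content-Type": "application/octet-stream",
            "x-ms-blob-type": "BlockBlob",  # required by Put Blob
        },
    )


def upload_file(sas_url: str, local_path: str) -> int:
    """Upload a local file in one request; return the HTTP status (201 on success)."""
    req = blob_put_request(sas_url, Path(local_path).read_bytes())
    with urllib.request.urlopen(req) as resp:
        return resp.status
```

A single Put Blob request has a maximum size and reads the whole file into memory here, so for very large files an SDK that uploads in blocks remains the safer choice.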

3. Creating the import transfer item

This section describes how an import transfer was created before February 2020. Since February 2020, initiate a conversion instead.

Once the file has been uploaded to the intermediate storage, create the import processing request:

POST /api/conversion/upload

{
  "format": "file",
  "uploadUrl": "https://....",
  "fileName": "someFile.ext",
  "destinations": [
    "Project"
  ],
  "datasetImportData": {
    "name": "test dataset",
    "description": "test description"
  }
}
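For illustration, a Python sketch that builds this body. The function and parameter names are hypothetical; the member names are those of the example request. Sending it (for example with urllib and a Content-Type: application/json header) is left to the caller.

```python
import json
from typing import Optional, Sequence


def build_import_request(
    upload_url: str,
    file_name: str,
    dataset_name: str,
    description: str = "",
    destinations: Sequence[str] = ("Project",),
    fmt: str = "file",
    project_id: Optional[str] = None,
) -> str:
    """Return the JSON body for POST /api/conversion/upload (pre-February 2020)."""
    body = {
        "format": fmt,
        "uploadUrl": upload_url,
        "fileName": file_name,
        "destinations": list(destinations),
        "datasetImportData": {"name": dataset_name, "description": description},
    }
    if project_id is not None:
        body["projectId"] = project_id  # optional member, only sent when given
    return json.dumps(body)
```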

Mandatory members

- format: currently supported values are "file" and "gdalvector"
- uploadUrl: the upload URL can be:
  - the URL from step 1 without its query part
  - a URL to a blob in Azure Blob Storage with a valid SAS token
  - the URL of an FTP file including credentials, such as ftp://user:password@path/to/file.ext (all URL-encoded)
- fileName: usually the original file name
- destinations: array of targets in which to store the uploaded data:
  - project: file in Project storage (stored as a file in Data Lake Store)
  - dedicated: Core Services structured storage
- datasetImportData: properties of the dataset being created; name is mandatory
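Two small helpers for preparing uploadUrl values, as a Python sketch. strip_query removes the query part (the SAS token) from the step-1 URL. ftp_url reads "all URL-encoded" as percent-encoding the user name, password and path so that characters such as @ or : in a password do not break the URL; that reading, and both helper names, are assumptions.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def strip_query(sas_url: str) -> str:
    """Return the step-1 URL without its query part (the SAS token)."""
    parts = urlsplit(sas_url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def ftp_url(user: str, password: str, host: str, path: str) -> str:
    """Build ftp://user:password@host/path with credentials and path percent-encoded."""
    return (
        f"ftp://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}/{quote(path.lstrip('/'))}"  # quote() keeps '/' separators
    )
```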

Optional members

- projectId: required when destinations contains the Project or Dedicated value

- appendDatasetId

- srid
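A client-side pre-check can catch a missing projectId before the request is sent. This Python sketch is hypothetical (the service performs its own validation): it checks the mandatory members and the projectId rule above, compares destinations case-insensitively because the examples write both "Project" and "project", and does not validate appendDatasetId or srid, whose semantics are not described here.

```python
from typing import Any, Mapping

MANDATORY = ("format", "uploadUrl", "fileName", "destinations", "datasetImportData")


def check_import_request(body: Mapping[str, Any]) -> None:
    """Raise ValueError if the import request breaks the member rules above."""
    for key in MANDATORY:
        if key not in body:
            raise ValueError(f"missing mandatory member {key!r}")
    if not body["datasetImportData"].get("name"):
        raise ValueError("datasetImportData.name is mandatory")
    destinations = {d.lower() for d in body["destinations"]}
    if destinations & {"project", "dedicated"} and not body.get("projectId"):
        raise ValueError("projectId is required for Project or Dedicated destinations")
```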

Custom arguments

Optionally, arguments specific to the selected format can be passed in the arguments member.